Claude Cowork vs Kuse: The Web-Based Claude AI Alternative for Real Work in 2026

Compare Claude Cowork vs Kuse in 2026: access, file safety, outputs, collaboration, and pricing gates—plus real workflows for reports, spreadsheets, and presentations.

Compare Claude Cowork and Kuse on file scope and context control. Learn how AI access boundaries impact safety, precision, and real-world workflows.

AI tools increasingly promise to “work with your files.” But as AI systems move from passive assistants to active executors, a more important question has emerged: how much should AI actually be allowed to see—and who controls that boundary?

Industry research makes this concern concrete. According to recent enterprise AI reports, document-heavy workflows account for over 60% of daily knowledge work, yet they are also where AI-driven errors, hallucinations, and unintended modifications most frequently occur. As organizations adopt agentic AI features—automated planning, file editing, and task execution—context control becomes one of the most critical design decisions behind the scenes.

This is where Claude Cowork and Kuse diverge in a fundamental way.

Both tools are capable of transforming messy inputs into usable outputs. But they make very different architectural choices about file scope and context boundaries—choices that directly affect precision, safety, compliance, and user trust.

In theory, more context sounds better. In practice, it often isn’t.

Modern AI systems are no longer limited to summarizing a document or answering a question. They can plan multi-step actions, generate new files, overwrite existing ones, and chain operations together. At this level, context is not just information—it is authority.

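When AI is given overly broad access, real-world business settings face concrete risks. Irrelevant files can add noise to the AI's reasoning. Ambiguous instructions can trigger unintended edits. Errors can spread across multiple files before anyone notices. Unrelated materials can end up in scope.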

At the same time, overly narrow context can reduce usefulness, forcing users to manually reassemble information that AI could have helped synthesize.

This tension—between autonomy and control—is now central to AI product design. File scope is no longer a technical implementation detail; it is a core workflow decision that determines whether AI feels trustworthy, predictable, and safe to use in professional environments.

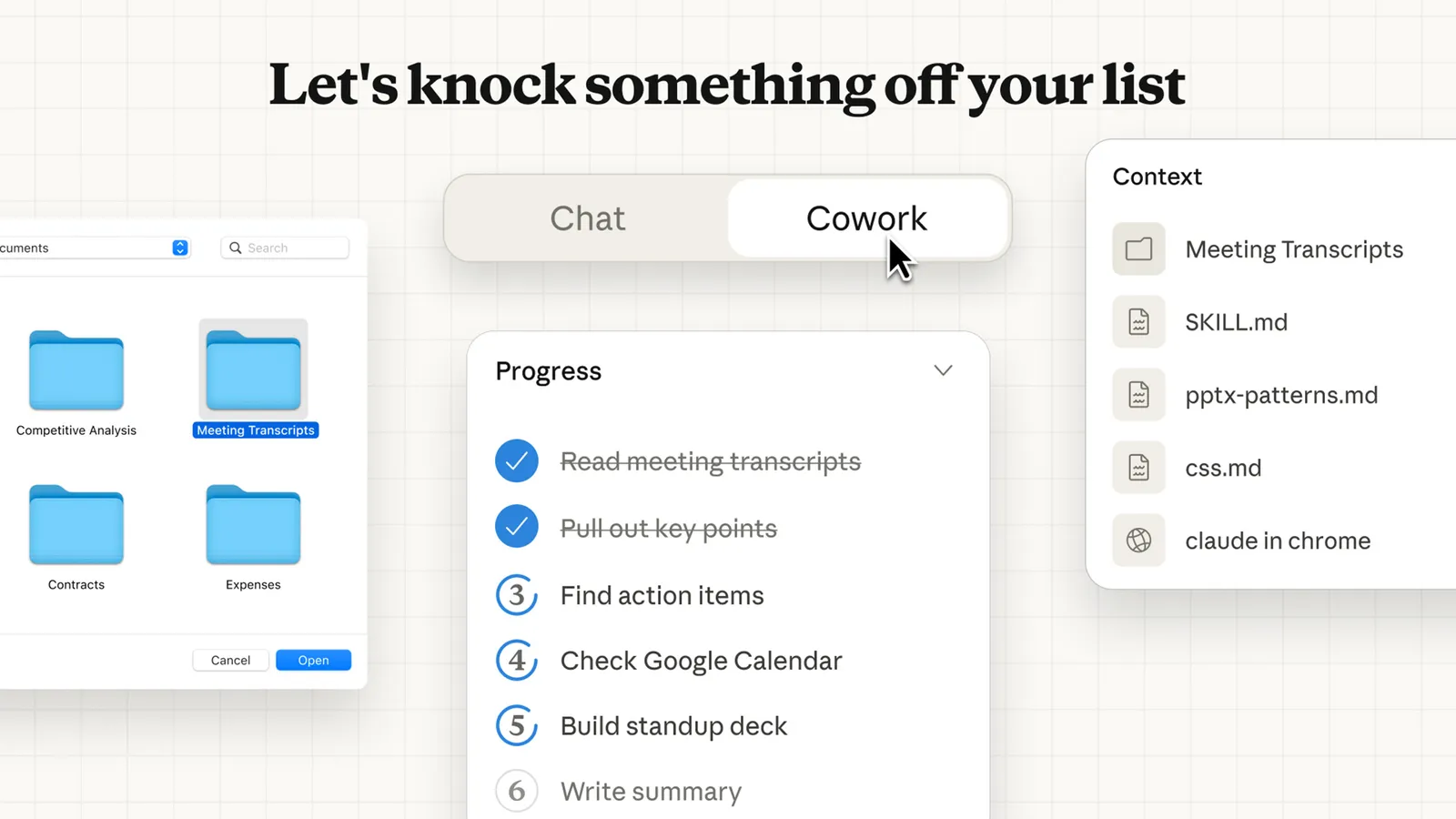

Claude Cowork represents Anthropic’s push toward agentic execution. It is designed to move Claude from a conversational assistant into a true “coworker” capable of carrying out complex tasks on a user’s behalf.

To enable this, Claude Cowork operates as a desktop-based system on macOS with access to a user-authorized local folder. Within that scope, Claude can read existing files, modify them, create new artifacts, and write results directly back to disk. This design allows Claude to plan tasks, break them into subtasks, and execute them sequentially or in parallel—often over extended sessions.

The strength of this approach is clear: Claude Cowork can operate across large collections of interrelated files and perform work that would otherwise require manual coordination. The tradeoff is that context is broad by default, and the system relies on user judgment, clear instructions, and careful oversight to prevent mistakes from cascading.

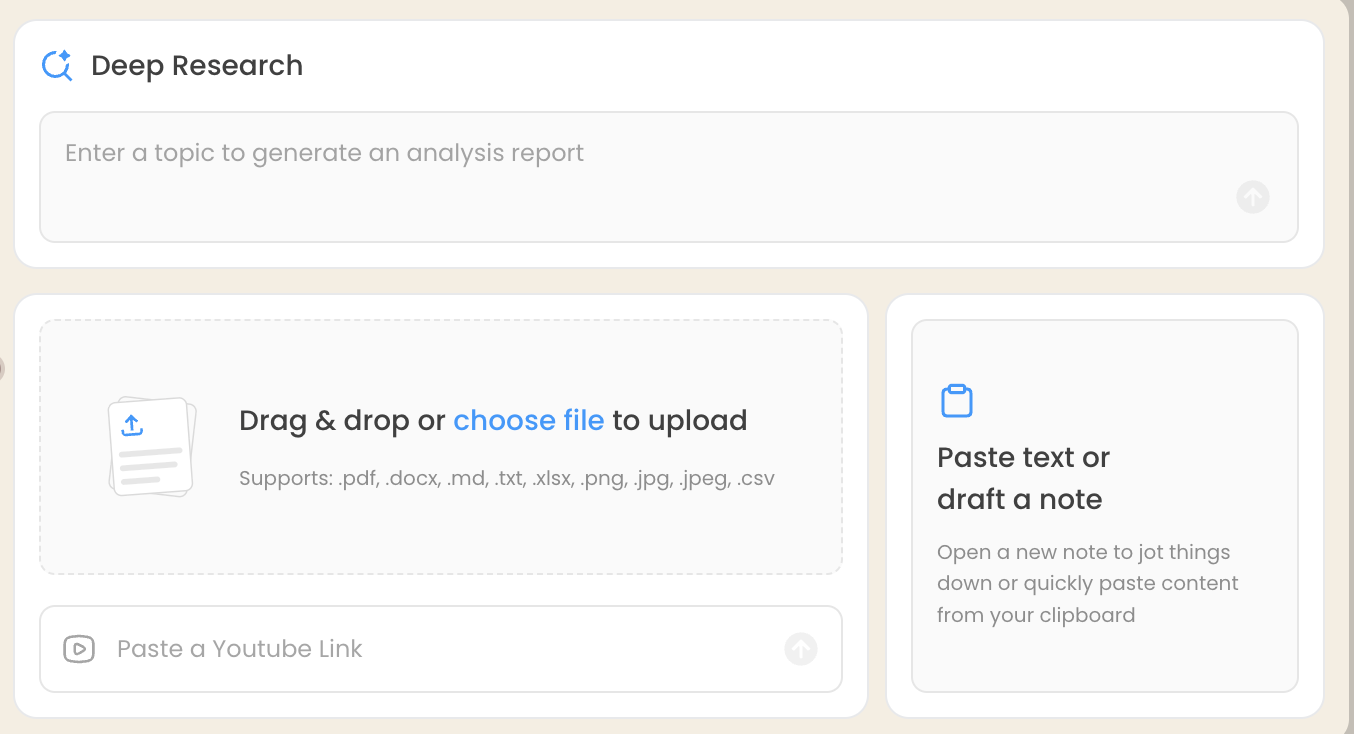

Kuse approaches the same problem from a different angle. Rather than granting AI ambient access to a file system, Kuse is built around intentional input selection inside a browser-based workspace.

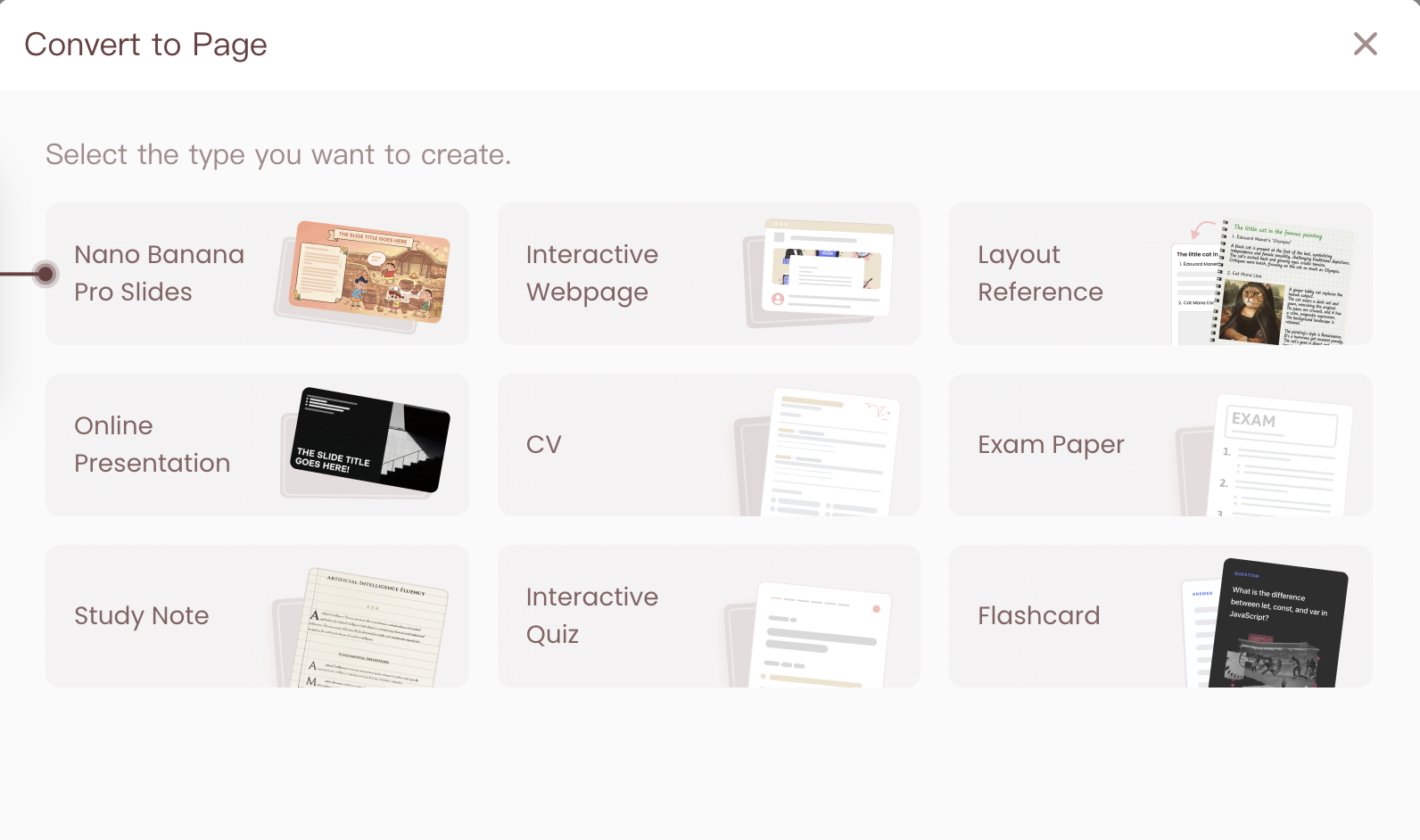

Users explicitly upload or reference the materials they want AI to work with. The AI’s context is limited to those inputs—no more, no less. From there, Kuse focuses on turning that curated context into structured, professional deliverables such as Excel files, documents, PDFs, or HTML outputs.

This design reflects a belief that context should be defined by humans, not inferred by AI. Kuse prioritizes predictability, reviewability, and collaboration, making it easier to share outputs with teammates or clients without exposing local file systems or unrelated materials.

In Claude Cowork, context begins with a folder. Once the user grants access, Claude can reason across everything inside that directory, forming its own understanding of which files matter for a given task. This is particularly effective when information is fragmented across many documents and relationships between files are essential to the outcome.

However, this power comes with complexity. The AI must infer relevance, which means context can become noisy. Ambiguous instructions may lead to unintended edits, and errors can ripple across multiple files before being noticed. Claude mitigates these risks by surfacing its plans and asking before taking major actions, but the scope itself remains intentionally broad.

Kuse deliberately narrows the scope. Context is defined at the file or input-set level, and the AI never sees anything the user has not explicitly provided. This makes outputs more predictable and easier to validate, especially when precision matters.

By optimizing for deep interaction with a single document or dataset at a time, Kuse emphasizes structure and clarity over exploration. The AI does not attempt to roam across a file system or guess what might be relevant. Instead, it focuses on producing high-quality outputs from clearly bounded inputs—a tradeoff that favors control and reliability.
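The minimal Python sketch below makes the contrast between the two scoping models concrete. It is hypothetical and does not reflect either product's actual implementation or API. The names `folder_scope`, `explicit_scope`, and `can_read` are illustrative assumptions. Folder scope exposes every file under an authorized directory. Explicit scope exposes only the paths the user selected.

```python
from pathlib import Path
from typing import Iterable


def folder_scope(root: Path) -> set[Path]:
    """Hypothetical folder-level scope: every file under the authorized
    directory is visible, and relevance is left for the agent to infer."""
    root = Path(root).resolve()
    return {p for p in root.rglob("*") if p.is_file()}


def explicit_scope(selected: Iterable[Path]) -> set[Path]:
    """Hypothetical input-set scope: only the files the user explicitly
    provided are visible. Nothing else is inferred or added."""
    return {Path(p).resolve() for p in selected}


def can_read(scope: set[Path], path: Path) -> bool:
    # A file is readable only if its resolved path is inside the scope.
    return Path(path).resolve() in scope


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        # Illustrative files; contents are placeholders.
        for name in ["q3_report.docx", "budget.xlsx", "hr_notes.txt"]:
            (root / name).write_text("placeholder")

        broad = folder_scope(root)
        narrow = explicit_scope([root / "budget.xlsx"])

        print(len(broad))                               # 3
        print(can_read(broad, root / "hr_notes.txt"))   # True
        print(can_read(narrow, root / "hr_notes.txt"))  # False
```

In the folder model, adding an unrelated file to the directory silently widens what the agent can see. In the explicit model, the scope changes only when the user changes the selection.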

Which approach fits best depends on how much autonomy you want AI to have, and how much responsibility you are willing to share with it.

If your work requires an AI agent to reason across many local files, take initiative, and execute long-running tasks with minimal human intervention, Claude Cowork’s broader scope is a powerful advantage.

If your priority is predictability, safety, and clear boundaries—especially in collaborative, regulated, or client-facing workflows—Kuse’s explicit context control is often the better fit.

Claude Cowork treats context as something AI can explore.

Kuse treats context as something humans should define.

Neither philosophy is universally better. They are optimized for different types of work, different risk tolerances, and different expectations of AI autonomy.

As AI continues to move closer to execution rather than assistance, understanding how a tool scopes and controls context may matter more than the model powering it.
