AI Pipeline Workflow: What It Is and How It Works

Learn what an AI pipeline workflow is, how it works end-to-end—from data ingestion to deployment and monitoring—and how to design AI pipelines that ship reliable models into production, not just prototypes.

What Is an AI Pipeline Workflow?

An AI pipeline workflow is the structured, automated system that transforms raw data into a production-ready AI capability. Rather than relying on disconnected scripts, fragile notebooks, or manually triggered jobs, an AI pipeline workflow defines a repeatable sequence of stages that reliably move data through ingestion, transformation, modeling, deployment, and ongoing monitoring.

At a practical level, AI pipelines exist to solve a very common problem: the gap between experimentation and production. Many organizations can build a model that performs well in isolation, but far fewer can operate that model continuously inside real business workflows. An AI pipeline workflow closes this gap by ensuring that every step—data preparation, training, validation, serving, and retraining—runs consistently, observably, and at scale.

A well-designed pipeline treats each phase as an independent but connected system. Data ingestion is automated and resilient to upstream changes. Validation enforces quality gates so corrupted or incomplete data never reaches models. Feature engineering is standardized so that training and inference stay aligned. Training and evaluation are triggered by data freshness or performance thresholds rather than manual intervention. Deployment mechanisms ensure predictions reach downstream systems reliably. Monitoring closes the loop, detecting drift and triggering retraining when necessary.

If AI workflows represent what the business does—routing tickets, pricing products, scoring risk, generating content—then AI pipeline workflows represent how intelligence is created and maintained. Without a dependable pipeline behind the scenes, even the most sophisticated AI workflows degrade over time and eventually fail.

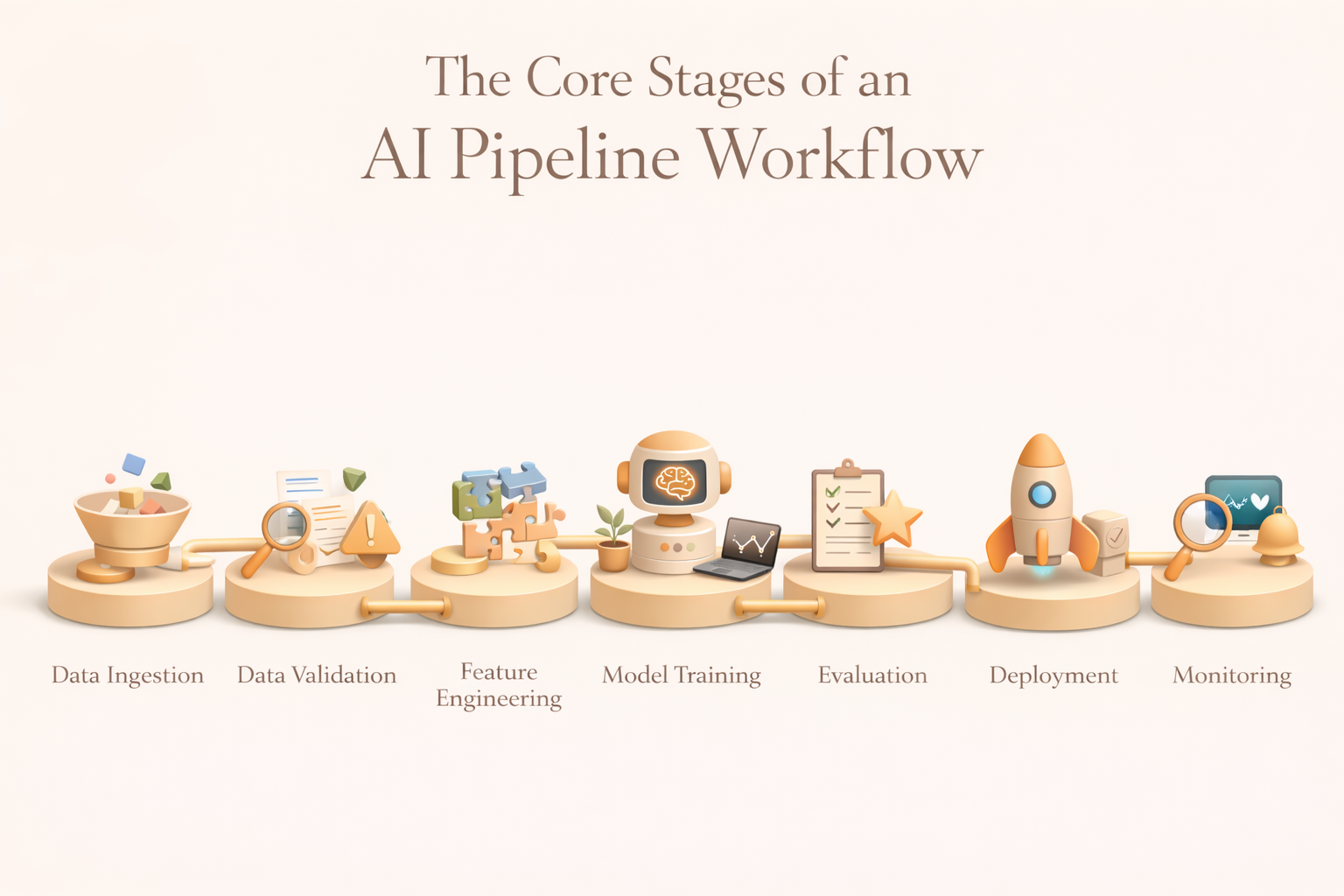

The Core Stages of an AI Pipeline Workflow

Although tools and implementations vary, most production AI pipelines follow a consistent structure. Each stage exists for a reason—and weaknesses in any one stage compound downstream.

1. Data Ingestion

Data ingestion is the entry point of the pipeline. Its role is to reliably collect raw data from multiple sources and deliver it into a controlled environment where downstream processing can occur.

Typical sources include transactional databases, event streams, cloud storage, SaaS applications, logs, sensors, and external APIs. Effective ingestion handles schema evolution, supports batch and streaming modes, and provides guarantees around delivery and completeness. Mature teams centralize ingestion into a data lake or warehouse to establish a single source of truth and reduce coupling between systems.
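One way to picture "handles schema evolution" is a normalizer that tolerates new upstream fields and fills defaults for optional ones while still rejecting records that lack required fields. The sketch below is illustrative only: the field names, the `REQUIRED` sentinel, and the `_extras` key are all hypothetical and do not come from any particular ingestion tool.

```python
# Sentinel marking fields that must be present in every raw record.
REQUIRED = object()

# Hypothetical schema: field name -> default value (or REQUIRED).
EXPECTED_FIELDS = {
    "order_id": REQUIRED,
    "amount": REQUIRED,
    "currency": "USD",  # optional field with a default
}


def normalize_record(raw: dict) -> dict:
    """Map a raw record onto the expected schema.

    Unknown fields are preserved under "_extras" instead of being dropped,
    so an upstream column addition does not break ingestion.
    """
    record = {}
    for field, default in EXPECTED_FIELDS.items():
        if field in raw:
            record[field] = raw[field]
        elif default is REQUIRED:
            raise KeyError(f"missing required field: {field}")
        else:
            record[field] = default
    record["_extras"] = {k: v for k, v in raw.items() if k not in EXPECTED_FIELDS}
    return record
```

Keeping unknown fields rather than discarding them is a design choice: it lets downstream stages adopt a new signal later without re-ingesting history.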

2. Data Validation & Preprocessing

Raw data is rarely usable as-is. Validation and preprocessing protect the pipeline from silent failures caused by missing values, schema drift, duplication, or corrupted records.

This stage enforces quality constraints, standardizes formats, removes noise, and applies privacy or compliance transformations when required. Crucially, validation is automated. If anomalies exceed defined thresholds, the pipeline can pause execution, alert owners, or route data for manual review. This transforms data quality from an afterthought into an operational guarantee.
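The threshold behavior described above can be sketched as a small quality gate. This is a minimal illustration, not a reference implementation: the `GateThresholds` values, the "required field is present and not None" rule, and the three decision names are assumptions chosen for the example.

```python
from dataclasses import dataclass
from enum import Enum


class GateDecision(Enum):
    PASS = "pass"      # continue to the next stage
    REVIEW = "review"  # route the batch for manual review
    HALT = "halt"      # pause the pipeline and alert owners


@dataclass(frozen=True)
class GateThresholds:
    # Hypothetical limits; real values depend on the dataset and use case.
    review_ratio: float = 0.01
    halt_ratio: float = 0.05


def is_valid(record: dict, required: tuple) -> bool:
    """A record is valid when every required field is present and not None."""
    return all(record.get(field) is not None for field in required)


def quality_gate(records, required, thresholds=GateThresholds()):
    """Return (decision, invalid_ratio) for one batch of records."""
    if not records:
        # An empty batch is treated as a failure rather than a silent pass.
        return GateDecision.HALT, 1.0
    invalid = sum(1 for r in records if not is_valid(r, required))
    ratio = invalid / len(records)
    if ratio >= thresholds.halt_ratio:
        return GateDecision.HALT, ratio
    if ratio >= thresholds.review_ratio:
        return GateDecision.REVIEW, ratio
    return GateDecision.PASS, ratio
```

A batch with 2 invalid records out of 100 would fall between the two hypothetical thresholds and be routed for review rather than halting the run.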

3. Feature Engineering

Feature engineering converts cleaned data into signals models can learn from. This may involve aggregating events into user-level metrics, encoding categorical variables, generating embeddings from text or images, or computing rolling statistics over time windows.

In production environments, feature engineering is rarely ad hoc. Teams use feature stores to standardize definitions, ensure consistency between training and inference, and enable reuse across models. This reduces technical debt and prevents subtle training-serving skew that can invalidate predictions.
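The core idea behind avoiding training-serving skew is that both paths call the same feature definition. The sketch below shows that idea without a feature store, using plain Python; the feature names, the purchase-amount input, and the window size are hypothetical.

```python
from collections import deque
from statistics import fmean


def rolling_mean(values, window):
    """Mean over the trailing `window` values, emitted for each position."""
    recent = deque(maxlen=window)
    out = []
    for v in values:
        recent.append(v)
        out.append(fmean(recent))
    return out


def build_features(purchase_amounts, window=3):
    """Single feature definition shared by the training and serving paths.

    `purchase_amounts` holds one user's purchase amounts, oldest first.
    """
    if not purchase_amounts:
        return {"purchase_count": 0, "total_spend": 0.0, "recent_avg_spend": 0.0}
    return {
        "purchase_count": len(purchase_amounts),
        "total_spend": float(sum(purchase_amounts)),
        "recent_avg_spend": rolling_mean(purchase_amounts, window)[-1],
    }
```

Because the training job and the inference service would both import `build_features`, a change to the window or the aggregation applies to both at once instead of drifting apart.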

4. Model Training

The training stage fits models using prepared features. This often includes dataset splitting, candidate model training, hyperparameter optimization, and artifact logging.

Training may run on fixed schedules, be triggered by new data volume, or respond to performance degradation detected in monitoring. Importantly, training outputs are versioned and tracked so teams can reproduce results, audit decisions, and roll back when necessary.
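The three trigger types mentioned above (schedule, new data volume, performance degradation) can be combined into one policy check. The following is a sketch under assumed values: the `TriggerPolicy` defaults and the use of accuracy as the live metric are illustrative, not recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TriggerPolicy:
    # Hypothetical defaults; tune per model and data volume.
    max_days_since_training: int = 7
    min_new_rows: int = 10_000
    min_accuracy: float = 0.90


def retraining_reasons(days_since_training, new_rows, live_accuracy,
                       policy=TriggerPolicy()):
    """Return the list of triggers that fired; an empty list means no retrain."""
    reasons = []
    if days_since_training >= policy.max_days_since_training:
        reasons.append("schedule")
    if new_rows >= policy.min_new_rows:
        reasons.append("new_data")
    if live_accuracy < policy.min_accuracy:
        reasons.append("performance")
    return reasons
```

Returning the reasons rather than a bare boolean makes it possible to log why each training run started, which helps with the audit and reproducibility goals described above.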

5. Evaluation, Governance & Approval

Before deployment, models must be evaluated against technical, business, and ethical criteria. This includes accuracy metrics, stability checks, fairness assessments, and business constraints such as cost or risk thresholds.

Many pipelines enforce approval gates at this stage. If a model fails to meet predefined standards, deployment is blocked automatically. This ensures governance is built into the pipeline rather than enforced retroactively.
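An automated approval gate can be as simple as comparing a candidate model's metrics against predefined limits and blocking deployment on any failure. In this sketch the metric names, the limits, and the `("min" | "max", threshold)` requirement format are assumptions made for illustration.

```python
# Hypothetical requirements: metric -> ("min" or "max", threshold).
REQUIREMENTS = {
    "accuracy": ("min", 0.90),              # technical criterion
    "demographic_parity_gap": ("max", 0.05),  # fairness criterion
    "cost_per_1k_predictions": ("max", 0.50),  # business criterion
}


def approval_gate(metrics: dict, requirements: dict = REQUIREMENTS):
    """Return (approved, failures). A missing metric counts as a failure."""
    failures = []
    for name, (kind, threshold) in requirements.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing")
        elif kind == "min":
            if value < threshold:
                failures.append(f"{name}: {value} < required {threshold}")
        elif kind == "max":
            if value > threshold:
                failures.append(f"{name}: {value} > allowed {threshold}")
        else:
            raise ValueError(f"unknown requirement kind: {kind}")
    return (not failures), failures
```

Treating a missing metric as a failure means a model cannot pass the gate simply because an evaluation step was skipped.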

6. Deployment

Deployment packages approved models into environments where they can generate predictions for real systems. Depending on the use case, this may involve batch scoring, real-time APIs, or streaming inference.

Orchestration frameworks coordinate model serving with upstream data pipelines and downstream applications. Reliability, latency, and rollback strategies are critical here—deployment failures directly impact business workflows.
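A rollback strategy presupposes that the pipeline remembers which model versions were live. The toy registry below illustrates that bookkeeping only; `ModelRegistry` and its methods are hypothetical names, and a real deployment would also need to switch traffic and persist this state.

```python
class ModelRegistry:
    """In-memory record of promoted model versions, newest last."""

    def __init__(self):
        self._history = []

    @property
    def live(self):
        """Currently serving version, or None if nothing was promoted."""
        return self._history[-1] if self._history else None

    def promote(self, version: str) -> None:
        """Mark an approved version as the one serving predictions."""
        self._history.append(version)

    def rollback(self) -> str:
        """Revert to the previously live version and return it."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()
        return self.live
```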

7. Monitoring, Drift Detection & Retraining

Once deployed, the pipeline shifts into continuous oversight. Monitoring tracks data drift, model performance, operational health, and cost. When metrics degrade or distributions shift, retraining workflows are triggered to restore performance.
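As one possible way to detect a distribution shift, the sketch below computes the Population Stability Index (PSI) between a baseline sample and a current sample of a single numeric feature. PSI is a swapped-in example technique, not something the pipeline described here prescribes; the bin count, the `eps` floor for empty bins, and the 0.2 retraining threshold (a commonly quoted rule of thumb) are assumptions.

```python
import math
from bisect import bisect_right


def _bin_fractions(values, edges, eps=1e-6):
    """Fraction of values per bin; out-of-range values go to the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = bisect_right(edges, v) - 1
        idx = min(max(idx, 0), len(counts) - 1)
        counts[idx] += 1
    total = len(values)
    # Floor at eps so empty bins do not cause log(0) or division by zero.
    return [max(c / total, eps) for c in counts]


def population_stability_index(baseline, current, bins=10):
    """PSI = sum((actual - expected) * ln(actual / expected)) over bins."""
    lo, hi = min(baseline), max(baseline)
    if lo == hi:
        hi = lo + 1.0  # avoid zero-width bins for a constant baseline
    width = (hi - lo) / bins
    edges = [lo + i * width for i in range(bins + 1)]
    expected = _bin_fractions(baseline, edges)
    actual = _bin_fractions(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def should_retrain(psi, threshold=0.2):
    """Hypothetical policy: trigger retraining when PSI exceeds the threshold."""
    return psi > threshold
```

Bin edges are derived from the baseline only, so the same reference is used every time the current window is compared against it.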

This final stage turns AI from a static artifact into a living system that adapts as conditions change.

AI Pipeline Stages at a Glance

Best Practices for Designing AI Pipeline Workflows

1. Design Pipelines as Code—Not One-Off Scripts

Production pipelines must be versioned, testable, and reviewable. Treating pipelines as code ensures reproducibility, collaboration, and accountability. Workflow definitions stored in Git allow teams to track changes, audit decisions, and roll back safely. This discipline prevents institutional knowledge from being trapped in notebooks or individual machines.

2. Create Strong Stage Boundaries With Clear Contracts

Each pipeline stage should expose explicit inputs and outputs. These contracts make systems modular and reduce cascading failures. When boundaries are clear, teams can iterate on models without disrupting ingestion, or replace feature logic without breaking deployment. Debugging becomes faster because failures are isolated.
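Explicit contracts can be expressed directly in code by having each stage declare the keys it requires and the keys it provides, with a runner that checks both. This is a minimal sketch; the `Stage` type, the dictionary payload, and the key-based contract are illustrative assumptions rather than the API of any orchestration framework.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Stage:
    name: str
    requires: frozenset          # payload keys the stage needs as input
    provides: frozenset          # payload keys the stage promises to output
    run: Callable[[dict], dict]  # takes the payload, returns new keys


def run_pipeline(stages, payload: dict) -> dict:
    """Run stages in order, enforcing each stage's input/output contract."""
    for stage in stages:
        missing = stage.requires - set(payload)
        if missing:
            raise ValueError(f"{stage.name}: missing inputs {sorted(missing)}")
        output = stage.run(payload)
        absent = stage.provides - set(output)
        if absent:
            raise ValueError(f"{stage.name}: did not produce {sorted(absent)}")
        # Merge into a new dict so earlier payloads are never mutated.
        payload = {**payload, **output}
    return payload
```

When a contract check fails, the error names the stage and the missing keys, which is what makes failures easy to isolate.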

3. Bake Monitoring Into the Pipeline From Day One

Monitoring is not an optional add-on. Pipelines should emit metrics for data quality, performance, latency, and errors at every stage. Alerting systems must notify teams before failures impact users. Feedback loops that capture ground truth enable retraining and continuous improvement. Without monitoring, pipelines silently decay.
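Emitting metrics at every stage is easier when instrumentation is applied uniformly, for example with a decorator. In the sketch below, the in-memory `METRICS` dictionary stands in for a real metrics backend, and the metric naming scheme is hypothetical.

```python
import time
from collections import defaultdict
from functools import wraps

# In-memory stand-in for a metrics backend: metric name -> recorded values.
METRICS = defaultdict(list)


def instrumented(stage_name):
    """Decorator that records latency, successes, and errors for a stage."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                result = func(*args, **kwargs)
            except Exception:
                METRICS[f"{stage_name}.errors"].append(1)
                raise  # re-raise so the orchestrator still sees the failure
            finally:
                # Latency is recorded on both success and failure.
                elapsed = time.perf_counter() - start
                METRICS[f"{stage_name}.latency_s"].append(elapsed)
            METRICS[f"{stage_name}.success"].append(1)
            return result
        return wrapper
    return decorator
```

A stage function would be wrapped as `@instrumented("validate")`, after which every call contributes latency and outcome counts without any change to the stage's own logic.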

4. Align Pipeline Behavior With Business SLAs and Workflow Requirements

Pipelines exist to support business workflows. Real-time customer interactions require low-latency inference; financial reporting may tolerate batch delays. Understanding these constraints upfront informs infrastructure choices, orchestration strategies, and cost trade-offs. Successful pipelines are engineered backward from workflow needs, not forward from tools.

5. Plan for Evolution, Not Just Deployment

AI systems evolve as data grows, markets shift, and models improve. Pipelines must support schema changes, new signals, and model upgrades without full rewrites. Modular design, standardized interfaces, and extensible orchestration logic protect long-term velocity and reduce re-engineering costs.

AI Pipeline Workflow vs. AI Workflow Automation

Conclusion

AI pipeline workflows are the operational backbone of production AI. They transform scattered data and experimental models into dependable systems that power real decisions at scale. When designed well, pipelines reduce risk, accelerate iteration, and enable AI workflows to remain accurate and trustworthy over time.

As AI becomes embedded in everyday work, platforms like Kuse play a complementary role by bringing pipeline outputs—summaries, predictions, insights—directly into collaborative workspaces. While pipelines handle ingestion, training, and monitoring behind the scenes, Kuse surfaces intelligence where people actually work, bridging the gap between machine learning infrastructure and human decision-making.

In modern AI systems, pipelines make intelligence possible—but thoughtful integration makes it usable.