How Human in the Loop AI Actually Works

Human in the loop AI keeps people in the decision chain where algorithms reach their limits. Here's how HITL works and why it matters.

Human in the loop (HITL) AI is a model where people actively participate in training, operating, or supervising an artificial intelligence system rather than letting it run autonomously.

The concept comes from military, aviation, and nuclear energy contexts, where automated systems had to let humans intervene to prevent disasters. A pilot can override autopilot. A controller can abort a launch sequence. The human stays in the decision chain.

AI borrowed this framework because machine learning models face similar problems. They perform well on inputs that resemble their training data. They struggle with edge cases, ambiguity, and situations their training data didn't cover. Human in the loop addresses this gap by inserting human judgment where algorithms reach their limits.

The approach rests on a simple premise: AI should augment human capabilities, not replace them. In many tasks, the combination outperforms either working alone.

How Human in the Loop AI Actually Works

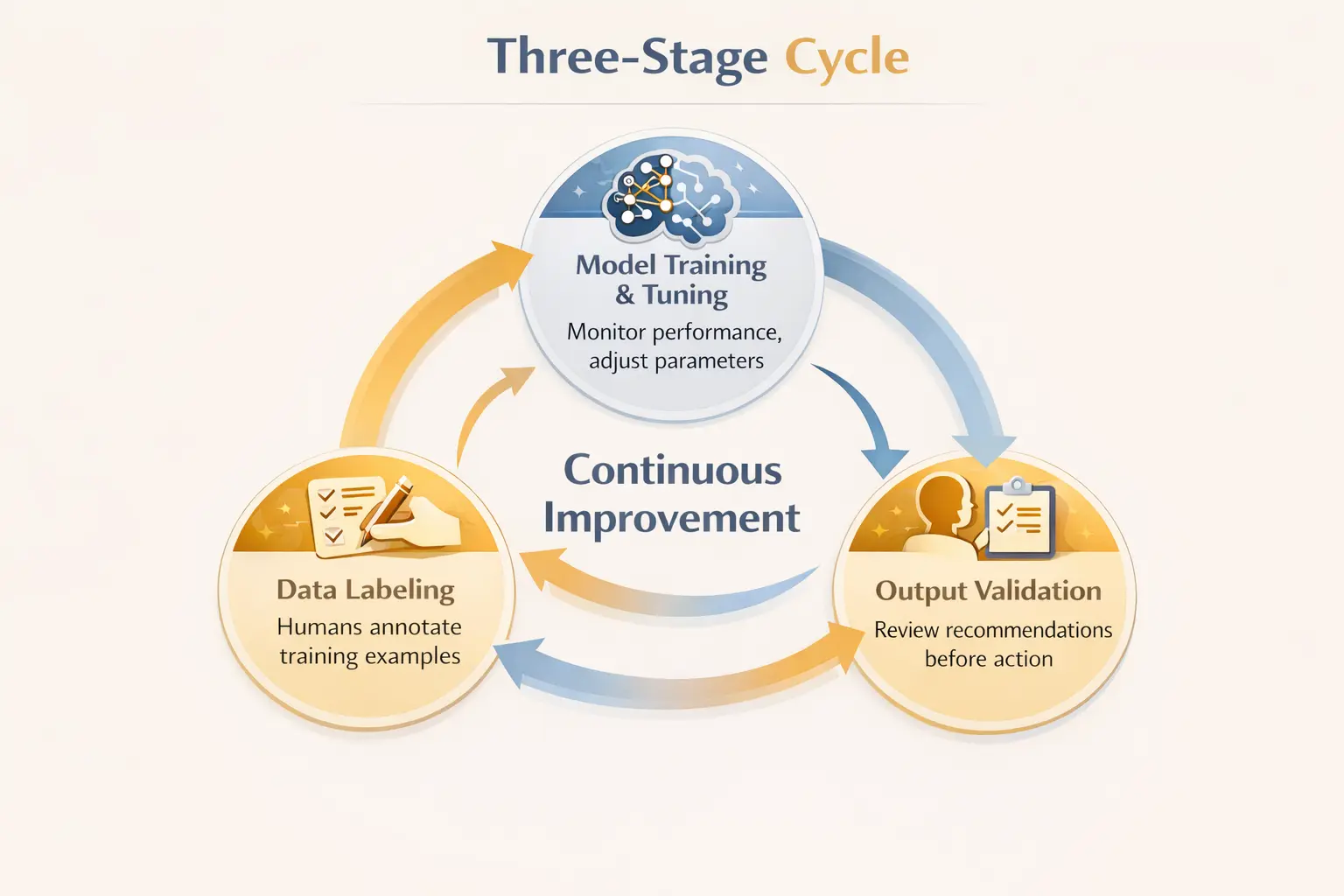

HITL creates a feedback cycle between humans and machines. This cycle operates across three stages of the AI lifecycle.

The first stage involves data labeling. Machine learning models learn from examples, and someone must label those examples correctly. In supervised learning, humans annotate training data to teach the model what "spam" versus "not spam" looks like, or which pixels in an image represent a tumor versus healthy tissue. According to IBM, this human annotation can be slow and expensive for large datasets, but it creates the foundation that supervised models depend on.
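Labeling pipelines often collect more than one label per example, so disagreements surface before they reach the model. The sketch below is a minimal example that assumes each item is labeled by several annotators (the text does not say this). It resolves each item by majority vote and sends low-agreement items to a human adjudicator. The function name `aggregate_labels` and the two-thirds agreement threshold are hypothetical.

```python
from collections import Counter


def aggregate_labels(labels_per_item, min_agreement=2 / 3):
    """Resolve each item's label by majority vote.

    labels_per_item: dict mapping item id -> list of annotator labels.
    min_agreement: hypothetical share of annotators who must agree before
    a label is accepted without adjudication.
    Returns (accepted, needs_review).
    """
    accepted = {}
    needs_review = []
    for item_id, labels in labels_per_item.items():
        label, votes = Counter(labels).most_common(1)[0]
        if votes / len(labels) >= min_agreement:
            accepted[item_id] = label
        else:
            # Annotators disagree too much: route to an expert adjudicator.
            needs_review.append(item_id)
    return accepted, needs_review


labels = {
    "email-1": ["spam", "spam", "spam"],
    "email-2": ["spam", "not spam", "not spam"],
    "email-3": ["spam", "not spam", "unsure"],
}
accepted, needs_review = aggregate_labels(labels)
print(accepted)      # {'email-1': 'spam', 'email-2': 'not spam'}
print(needs_review)  # ['email-3']
```

The adjudicator's decision on `email-3` can also feed back into the annotation guidelines, so the next batch has fewer ambiguous cases.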

The second stage involves model training and tuning. Humans monitor how well the model performs. They score outputs. They identify where predictions go wrong. They adjust hyperparameters and training data. The model improves based on this human feedback rather than just grinding through more data.
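One common way to point human feedback where it helps most is uncertainty sampling, a form of active learning. It is swapped in here for illustration; the text does not name a specific method. The sketch assumes a binary classifier that outputs a probability for the positive class. Predictions closest to 0.5 are the least certain, so they go to reviewers first. The names `select_for_review` and `budget` are hypothetical.

```python
def select_for_review(predictions, budget):
    """Pick the `budget` items whose predicted probability is closest to 0.5.

    predictions: dict mapping item id -> model's probability of the positive class.
    budget: how many items reviewers can score this round (an assumption).
    """
    # Distance from 0.5: a smaller distance means the model is less certain.
    ranked = sorted(predictions, key=lambda item: abs(predictions[item] - 0.5))
    return ranked[:budget]


preds = {"a": 0.97, "b": 0.52, "c": 0.10, "d": 0.45, "e": 0.80}
print(select_for_review(preds, budget=2))  # ['b', 'd']
```

Reviewers' labels for the selected items would then join the training set before the next retraining round. Each hour of human effort goes to the cases the model finds hardest, instead of to examples it already handles confidently.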

The third stage involves output validation. Before AI recommendations reach end users or trigger actions, humans review them. A doctor checks an AI diagnosis. A content moderator reviews flagged posts. A financial analyst validates algorithmic trading signals. Human oversight catches errors before they cause harm.
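Reviewing every output is not always feasible, so some deployments gate on model confidence instead. The sketch below is a minimal example that assumes the model reports a confidence score with each label. Outputs below a hypothetical threshold of 0.9 wait in a human review queue instead of triggering an action. The `Prediction` type and `route` function are illustrative names, not a specific product's API.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's confidence in `label`, between 0 and 1


def route(predictions, threshold=0.9):
    """Split predictions into auto-approved results and a human review queue.

    threshold is a hypothetical cutoff; real systems tune it per use case.
    """
    auto_approved, review_queue = [], []
    for p in predictions:
        if p.confidence >= threshold:
            auto_approved.append(p)
        else:
            review_queue.append(p)  # a person checks before any action is taken
    return auto_approved, review_queue


batch = [
    Prediction("post-1", "allowed", 0.98),
    Prediction("post-2", "harassment", 0.62),
    Prediction("post-3", "allowed", 0.91),
]
approved, queue = route(batch)
print([p.item_id for p in approved])  # ['post-1', 'post-3']
print([p.item_id for p in queue])     # ['post-2']
```

In the highest-stakes settings, such as clinical diagnosis, the threshold is effectively 1.0: every output goes through the queue.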

This three-stage involvement creates continuous improvement. The model gets better. Human reviewers learn which errors to watch for. The system as a whole becomes more reliable than either component alone.

Why Human in the Loop Matters for AI Systems

Several forces make HITL essential rather than optional.

AI models embed biases from their training data. Historical hiring data reflects past discrimination. Medical datasets underrepresent certain populations. Financial models perpetuate existing inequalities. Human reviewers can identify when outputs reflect these biases and intervene before biased recommendations reach affected people.
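Spotting bias usually starts with measurement. The sketch below is a minimal example that assumes binary model decisions tagged with a group attribute; the group names and counts are made up. It computes each group's selection rate and flags any group whose rate falls below 80% of the highest group's. That screening heuristic is known as the four-fifths rule in US employment guidance. A flag is a prompt for human investigation, not proof of bias.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: list of (group, selected) pairs, where selected is a bool."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def flag_disparities(decisions, ratio=0.8):
    """Return groups whose selection rate is below `ratio` times the highest rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    return sorted(g for g, r in rates.items() if best > 0 and r / best < ratio)


# Hypothetical screening outcomes from a hiring model.
decisions = (
    [("group_a", True)] * 30 + [("group_a", False)] * 70
    + [("group_b", True)] * 18 + [("group_b", False)] * 82
)
print(selection_rates(decisions))   # {'group_a': 0.3, 'group_b': 0.18}
print(flag_disparities(decisions))  # ['group_b']
```

Here `group_b` is selected at 0.18 / 0.3 = 60% of `group_a`'s rate, so it is flagged. A reviewer would then examine those decisions before they reach applicants.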

Edge cases defeat purely automated systems. Real-world situations don't always match training scenarios. A self-driving car encounters weather conditions it's never seen. A medical AI faces a symptom combination outside its training set. An ATM's visual recognition fails to read a handwritten check. Human in the loop provides fallback capability when automation reaches its boundaries.
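A crude but concrete version of that fallback records the range of each feature seen during training. It defers to a human whenever a new input falls outside that range or contains a feature the model never saw. This is a minimal sketch with hypothetical feature names. Production systems typically use more robust out-of-distribution detection, but the routing decision is the same.

```python
def fit_ranges(training_rows):
    """Record the min and max of each numeric feature seen in training."""
    ranges = {}
    for row in training_rows:
        for name, value in row.items():
            low, high = ranges.get(name, (value, value))
            ranges[name] = (min(low, value), max(high, value))
    return ranges


def needs_human(row, ranges):
    """True if any feature is unseen or outside its training range."""
    for name, value in row.items():
        if name not in ranges:
            return True
        low, high = ranges[name]
        if not low <= value <= high:
            return True
    return False


# Hypothetical sensor features for a driving model.
train = [
    {"temperature_c": 5, "visibility_m": 800},
    {"temperature_c": 30, "visibility_m": 2000},
]
ranges = fit_ranges(train)
print(needs_human({"temperature_c": 18, "visibility_m": 1500}, ranges))  # False
print(needs_human({"temperature_c": -20, "visibility_m": 40}, ranges))   # True
```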

Accountability requires human involvement. When AI makes mistakes, someone must be responsible. Purely autonomous systems create accountability gaps. HITL ensures humans remain in the decision chain for consequential choices, preserving clear lines of responsibility.
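Preserving that chain of responsibility is partly a record-keeping problem. The sketch below is a minimal example with hypothetical field and function names. It logs every consequential decision with the model's recommendation, the human who signed off, and a rationale whenever that person overrode the model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    case_id: str
    model_recommendation: str
    final_decision: str
    reviewer: str  # the person accountable for the final decision
    rationale: str = ""
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    @property
    def overridden(self) -> bool:
        """True when the human's decision differs from the model's."""
        return self.final_decision != self.model_recommendation


def record_decision(log, case_id, recommendation, decision, reviewer, rationale=""):
    """Append a decision to the audit log, enforcing a named reviewer."""
    if not reviewer:
        raise ValueError("a consequential decision needs a named reviewer")
    if decision != recommendation and not rationale:
        raise ValueError("overriding the model requires a written rationale")
    entry = DecisionRecord(case_id, recommendation, decision, reviewer, rationale)
    log.append(entry)
    return entry


audit_log = []
record_decision(audit_log, "loan-17", "deny", "approve", "j.doe",
                rationale="Recent income documents the model did not see")
print(audit_log[0].overridden)  # True
```

Because an entry cannot be created without a reviewer, no decision in this log lacks a responsible person.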

Some decisions require ethical reasoning that algorithms can't provide. Human-AI collaboration becomes essential when choices involve values, tradeoffs, or context that can't be reduced to optimization functions. Humans bring judgment. AI brings processing power. Together they handle problems neither could solve alone.

Human in the Loop Across Industries

HITL appears wherever AI touches high-stakes decisions.

Healthcare provides clear examples. AI can analyze medical images, suggest diagnoses, and recommend treatments. But physicians review these outputs before acting on them. A Nature Medicine editorial noted that 58% of physicians worry about over-reliance on AI for diagnosis. HITL addresses this concern by keeping clinical judgment central while using AI to enhance diagnostic capabilities.

Content moderation combines AI detection with human review. Algorithms flag potentially problematic content at scale. Human moderators make final decisions on ambiguous cases where context matters. The volume requires automation. The nuance requires human judgment.

Financial services use HITL for fraud detection and compliance. AI systems flag suspicious transactions. Human analysts investigate flags before freezing accounts or reporting activity. False positives would harm innocent customers. False negatives would enable crime. Human review balances these risks.
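Where to set the flagging threshold is exactly that tradeoff. The sketch below uses made-up scores and labels for eight transactions. It counts false positives (legitimate transactions flagged) and false negatives (fraud missed) at a few thresholds. The function name `confusion_counts` is illustrative.

```python
def confusion_counts(scores, is_fraud, threshold):
    """Count false positives and false negatives when flagging score >= threshold."""
    fp = sum(1 for s, f in zip(scores, is_fraud) if s >= threshold and not f)
    fn = sum(1 for s, f in zip(scores, is_fraud) if s < threshold and f)
    return fp, fn


# Hypothetical model scores and ground-truth labels.
scores = [0.05, 0.20, 0.35, 0.55, 0.60, 0.75, 0.90, 0.95]
is_fraud = [False, False, False, True, False, False, True, True]

for t in (0.3, 0.5, 0.8):
    fp, fn = confusion_counts(scores, is_fraud, t)
    print(f"threshold={t}: flagged legit={fp}, missed fraud={fn}")
```

With these toy numbers, a threshold of 0.3 flags three legitimate transactions and misses no fraud, while 0.8 flags none but misses one fraudulent transaction. Human analysts absorb the extra flags from a low threshold, so a flag triggers an investigation rather than an automatic account freeze.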

Autonomous vehicles represent evolving HITL implementation. Current systems require human drivers to take control in certain situations. The AI handles routine driving. Humans handle exceptions. As technology improves, the boundary shifts, but human oversight remains part of the safety architecture.

Customer service increasingly combines AI chatbots with human escalation. Bots handle routine inquiries efficiently. Complex or sensitive issues transfer to human agents. The hybrid approach serves more customers while preserving human connection where it matters most.

The Challenges of Human in the Loop

HITL isn't without drawbacks.

Human involvement creates bottlenecks. AI can process thousands of items per second, while a human reviewer handles a few items per minute at best. Scaling HITL means either hiring many reviewers or accepting that only a sample of AI outputs receives human attention.
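The arithmetic makes the bottleneck concrete. The sketch below uses hypothetical volumes: 1,000 AI outputs per second, and a reviewer who handles 2 items per minute over a 7-hour shift. It computes how many reviewers full coverage would need. It then draws a uniform random audit sample sized to what the team can actually review. The helper names are illustrative.

```python
import math
import random

SECONDS_PER_DAY = 24 * 60 * 60


def reviewers_needed(outputs_per_second, items_per_reviewer_minute, shift_hours):
    """Reviewers required to look at every output produced in a day."""
    daily_outputs = outputs_per_second * SECONDS_PER_DAY
    per_reviewer = items_per_reviewer_minute * 60 * shift_hours
    return math.ceil(daily_outputs / per_reviewer)


def audit_sample(output_ids, capacity, seed=0):
    """Uniform random sample sized to what reviewers can actually handle."""
    rng = random.Random(seed)  # seeded so the audit set is reproducible
    return rng.sample(output_ids, min(capacity, len(output_ids)))


# Hypothetical volumes: 1,000 outputs/s; 2 reviews/min; 7-hour shifts.
print(reviewers_needed(1000, 2, 7))  # 102858

day_of_outputs = [f"out-{i}" for i in range(10_000)]
sample = audit_sample(day_of_outputs, capacity=840)  # one reviewer's daily load
print(len(sample))  # 840
```

Under these assumptions, full coverage would take more than 100,000 reviewers. This is why high-volume systems often review a random sample and reserve full review for flagged or high-risk outputs.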

Costs rise significantly. Human annotation for large datasets requires thousands of labor hours. Expert reviewers in specialized domains like medicine or law cost even more. Organizations must balance accuracy gains against budget constraints.

Human reviewers make mistakes too. Fatigue, distraction, and cognitive biases affect human judgment. Over-reliance on AI recommendations can dull human attention. The Nature Medicine piece cited research showing that endoscopists who used AI assistance for three months saw their own detection rates decline after they stopped using it. Human skills can atrophy when AI handles too much.

Privacy concerns arise when humans review sensitive data such as medical records, financial information, and personal communications. Each reviewer who accesses this data creates additional exposure risk beyond what automated systems alone would create.

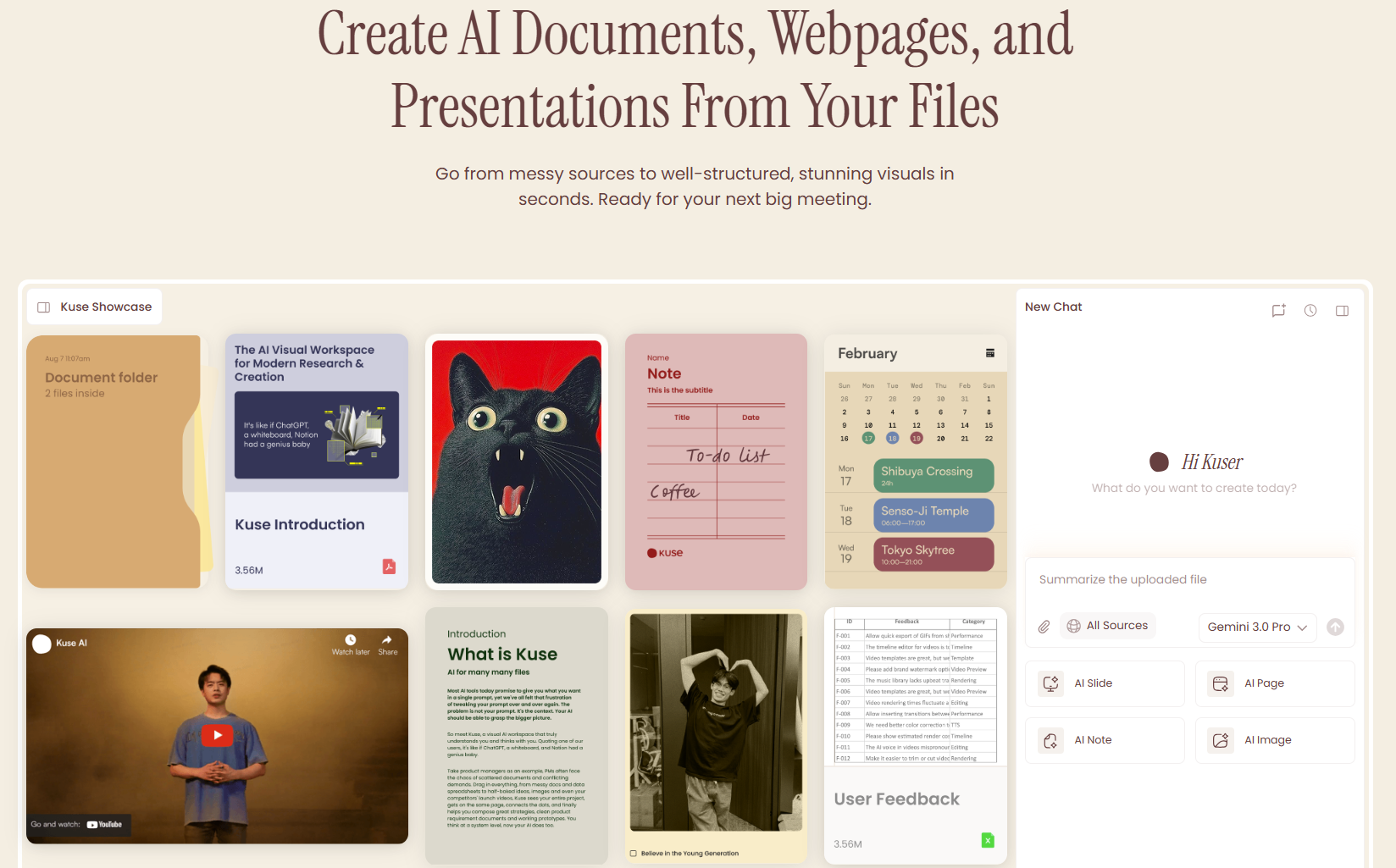

Where Kuse AI Fits Human in the Loop Workflows

Human in the loop AI generates knowledge at every stage. Annotation guidelines. Model performance notes. Edge case documentation. Reviewer feedback. Decision rationale for overridden outputs.

This knowledge scatters across spreadsheets, training documents, internal wikis, and individual reviewers' notes. Finding what you need becomes its own challenge. Why did we label this category that way? What edge cases has this model struggled with? How should reviewers handle this ambiguous situation?

Kuse organizes this operational knowledge so HITL teams find answers without digging through scattered documentation. When a new reviewer needs annotation guidelines, they're accessible. When model retraining requires documented edge cases, the examples exist in one place. When someone questions a labeling decision, the rationale is findable.

Human in the loop workflows run smoother when the knowledge they generate stays organized and accessible.

Conclusion

Human in the loop AI represents a practical middle ground between full automation and manual processes. Machines handle scale and consistency. Humans provide judgment, ethics, and edge-case handling.

The approach works because it plays to the strengths of both. AI processes vast amounts of data quickly. Humans catch what automation misses. The feedback between them improves the system continuously.

HITL isn't a temporary stage before full AI autonomy. It's a sustainable model for domains where decisions matter too much to leave entirely to algorithms. Healthcare diagnosis. Content moderation. Financial compliance. Autonomous vehicle safety. These areas will likely always involve humans in the loop because the stakes demand human judgment as a backstop.

The question isn't whether to keep humans in the loop. The question is how to design that involvement effectively. Where in the workflow? How much review? What training for reviewers? What tools to support them?

Organizations that answer these questions well get the benefits of AI capability with the reliability of human oversight. Those that don't risk either automation failures or unsustainable review bottlenecks.